A joint venture in technology development between researchers at

The technology has already been adopted by two companies. Reflecting widespread interest in advancing it, A&M researchers have an ongoing industry research and development consortium funded by eight oil production and service companies, and they have won a grant from the National Science Foundation.

The groundbreaking Texas A&M technology was developed in a project managed by the Office of Fossil Energy's National Energy Technology Laboratory, which provided the research funding. Total investment in this three-year research project was US $890,000, including a government share of $630,000 and university cost-sharing of $160,000.

This approach adapts sophisticated computer modeling to the personal computer, using "Generalized Travel Time Inversion" technology. The resulting software puts reservoir modeling within reach of small domestic producers. It will save time and money in predicting the location of bypassed oil and in planning its recovery.

More than two-thirds of all the oil discovered in

Much bypassed oil lies in difficult-to-access pockets. Predicting the location and size of these elusive, compartmentalized deposits is costly because it often requires complex computing capabilities. Many independent producers aren't able to commit the personnel or buy the expensive supercomputer time required to build and operate the models needed to find and produce these overlooked stores of oil.

The A&M research effort engineered a cost-effective way to streamline computer-generated reservoir models. It provides significant savings in computation time and manpower.

Reservoir characterization identifies "unswept" regions in these mature fields containing high oil or gas saturation. In this process, geoscientists first employ computer models to develop an accurate picture, or characterization, of a productive oil reservoir. "History-matching" is then used to calibrate the model by correlating its predictions of oil and gas production to a reservoir's actual production history.
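At its core, history matching measures how far a model's forecast sits from what the field actually produced. The Python sketch below is purely illustrative: it assumes a simple root-mean-square misfit and two hypothetical candidate models with made-up rates. Real workflows adjust reservoir properties with an optimizer rather than picking from a fixed list.

```python
import math


def rms_misfit(observed, simulated):
    """Root-mean-square mismatch between observed and simulated production.

    Assumes both series are sampled at the same report dates.
    """
    if len(observed) != len(simulated):
        raise ValueError("observed and simulated series must have equal length")
    if not observed:
        raise ValueError("series must be non-empty")
    squared = sum((o - s) ** 2 for o, s in zip(observed, simulated))
    return math.sqrt(squared / len(observed))


def best_matching_model(observed, candidates):
    """Return the name of the candidate whose forecast best reproduces history."""
    return min(candidates, key=lambda name: rms_misfit(observed, candidates[name]))


# Hypothetical monthly oil rates (bbl/d) and two hypothetical model forecasts.
history = [100.0, 95.0, 88.0, 80.0]
models = {
    "low_perm": [100.0, 90.0, 80.0, 70.0],
    "high_perm": [100.0, 96.0, 89.0, 81.0],
}
print(best_matching_model(history, models))  # high_perm
```

The "high_perm" forecast misses by about 0.9 bbl/d on average versus roughly 6.9 bbl/d for "low_perm", so it is the better-calibrated model in this toy case.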

A key input to the history-matching process is data from tracer tests, in which traceable gases or liquids are injected into a well to determine the paths and velocities of fluids as they move through the reservoir. This information helps reservoir engineers calculate how much oil remains in the reservoir and determine the most efficient methods to sweep this residual oil from the reservoir.
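The article does not say how the A&M method turns tracer data into a remaining-oil estimate. For context, one widely used approach (assumed here, not taken from the project) is moment analysis of a partitioning interwell tracer test. A tracer that dissolves partly into oil arrives later than a conservative water tracer, and that delay reveals the average oil saturation along the swept path. The sketch below computes each tracer's mean arrival time from its breakthrough curve, then converts the delay into saturation using a partition coefficient K. All numbers are hypothetical.

```python
def mean_arrival_time(times, concentrations):
    """First temporal moment of a tracer breakthrough curve.

    Computes integral(t * C dt) / integral(C dt) with the trapezoidal rule.
    """
    if len(times) != len(concentrations) or len(times) < 2:
        raise ValueError("need at least two matching time/concentration samples")
    numerator = 0.0
    denominator = 0.0
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        c_prev, c_curr = concentrations[i - 1], concentrations[i]
        numerator += 0.5 * dt * (times[i - 1] * c_prev + times[i] * c_curr)
        denominator += 0.5 * dt * (c_prev + c_curr)
    if denominator == 0.0:
        raise ValueError("no tracer was recovered")
    return numerator / denominator


def oil_saturation(t_conservative, t_partitioning, k_partition):
    """Average oil saturation from the partitioning tracer's delay.

    Retardation: t_p / t_n = 1 + K * So / (1 - So), which rearranges to
    So = (t_p - t_n) / (t_p + (K - 1) * t_n).
    """
    return (t_partitioning - t_conservative) / (
        t_partitioning + (k_partition - 1.0) * t_conservative
    )


# Hypothetical field numbers: mean arrival times in days, K is dimensionless.
print(round(oil_saturation(10.0, 12.0, 2.0), 4))  # 0.0909
```

In this example a two-day lag between tracers with K = 2 implies roughly 9% oil saturation in the swept region.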

In the Texas A&M project, researchers developed a novel, computerized method for rapidly interpreting field tracer tests. This innovation promises a cost-effective, time-saving way to estimate the amount of oil remaining in bypassed reservoir compartments. The new method integrates computer simulation with history matching, allowing scientists to design tracer tests and interpret the data with practical PC-based software, a process much faster than conventional history matching. These cost and time savings, together with the streamlined model and accessible PC-based tools, make the technology feasible for small independent producers.
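The article names "Generalized Travel Time Inversion" without spelling it out. As generally described in the travel-time inversion literature, the idea is to collapse the mismatch between simulated and observed tracer or production curves into a single time shift that best lines them up. Reservoir properties such as permeability are then adjusted until that shift shrinks. The Python sketch below illustrates only the first step, under several assumptions. The fit measure is the coefficient of determination (R²). `simulate` is a hypothetical stand-in for interpolated simulator output. The shift is found by a simple grid search. It is not the project's software.

```python
import math


def r_squared_with_shift(times, observed, simulate, shift):
    """R^2 between observed data and the simulated response delayed by `shift`.

    `simulate(t)` returns the simulated value at time t; shift > 0 means the
    observed response arrives later than the simulated one.
    """
    mean_obs = sum(observed) / len(observed)
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    if ss_tot == 0.0:
        raise ValueError("observed data must vary over time")
    ss_res = sum((o - simulate(t - shift)) ** 2 for t, o in zip(times, observed))
    return 1.0 - ss_res / ss_tot


def travel_time_shift(times, observed, simulate, trial_shifts):
    """Brute-force search for the shift that best aligns the two curves."""
    return max(
        trial_shifts,
        key=lambda dt: r_squared_with_shift(times, observed, simulate, dt),
    )


# Hypothetical tracer responses: the model predicts a peak at day 10,
# while the field data peak at day 13.
def simulated(t):
    return math.exp(-((t - 10.0) ** 2) / 8.0)


days = [float(d) for d in range(31)]
field = [math.exp(-((d - 13.0) ** 2) / 8.0) for d in days]
shifts = [0.5 * k for k in range(-20, 21)]
print(travel_time_shift(days, field, simulated, shifts))  # 3.0
```

Matching one number per well, rather than every data point, is what makes this style of inversion comparatively cheap, which is consistent with the article's point about running on a PC.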

Recommended Reading

From Satellites to Regulators, Everyone is Snooping on Oil, Gas

2024-04-10 - From methane taxes to an environmental group’s satellite trained on oil and gas emissions, producers face intense scrutiny, even if the watchers aren’t necessarily interested in solving the problem.

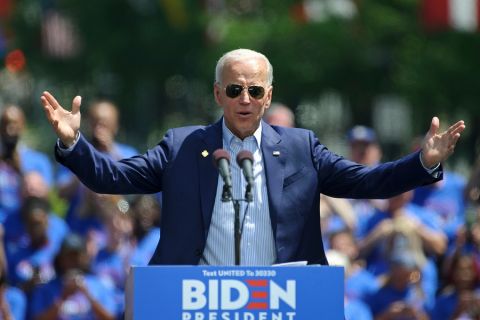

Pitts: Producers Ponder Ramifications of Biden’s LNG Strategy

2024-03-13 - While existing offtake agreements have been spared by the Biden administration's LNG permitting pause, the ramifications fall on supplying the Asian market post-2030, many analysts argue.

Hirs: SEC’s Enhanced Climate-related Disclosures Are Unnecessary—Even According to SEC

2024-03-15 - The SEC’s rationale for enhanced climate-disclosure rules is weak and contradictory, says Ed Hirs.

Exclusive: Dan Romito Urges Methane Mitigation Game Plan

2024-04-08 - Dan Romito, the consulting partner at Pickering Energy Partners, says evading mitigation responsibility is "naive" as methane detection technology and regulation are focusing on oil and gas companies, in this Hart Energy Exclusive interview.

Hirs: LNG Plan is a Global Fail

2024-03-13 - Only by expanding U.S. LNG output can we provide the certainty that customers require to build new gas power plants, says Ed Hirs.