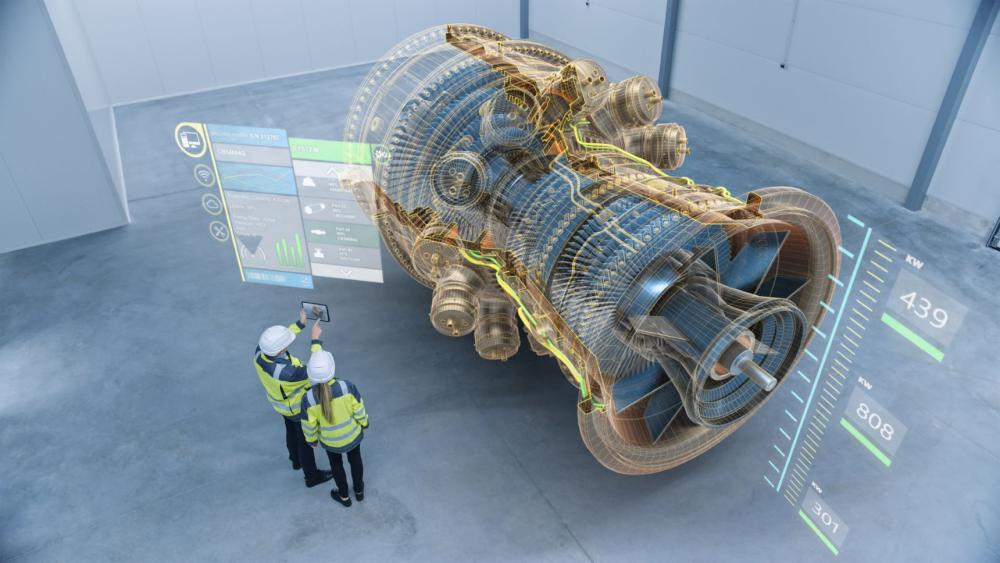

CogniteAI allows users, regardless of coding background, easy access to complex industrial data, saving time and streamlining operations. (Source: Cognite)

Artificial intelligence has infiltrated everyday life, from Siri’s jokes to Google search results to suggestions for the quickest commute home. And while AI in the oil and gas industry is becoming more common, generative AI, which is capable of creating text, images or other media, could transform how the industry operates.

At least, that is what Jason Schern, field CTO at Cognite, asserts.

To that end, Cognite has built CogniteAI, an AI-powered software-as-a-service (SaaS) solution to improve onshore operations. Its generative AI capabilities are part of the company’s Cognite Data Fusion platform, which employs a digital twin to mirror operations. CogniteAI is designed to provide access to complex industrial data by answering questions posed in natural language. As Schern puts it, the system “allows users to independently go ask questions of the data and gather insights without having to be dependent upon a lot of other people.”

CogniteAI is able to provide these insights through contextualized data. In situations where data scientists or engineers might be needed for information or answers, CogniteAI can provide an answer or solution immediately. Ready access greatly reduces the time needed in onshore operations, Schern said.

“If I'm a field engineer on site and I see what appears to be a leak, I can start accessing information about that asset, right? [I can learn about] the valves and what work orders have been done recently right there from my mobile device instantly, and then I can start with appropriate phone calls to go get additional help as needed,” he said.

CogniteAI’s generative properties make it different from traditional machine learning and deep learning. Machine learning works by making observations based on statistical models built from predictive data sets, while deep learning uses AI to observe data, figure out the data that is predictive and build a model that helps find that information.

Generative AI takes an entirely different approach.

“Generative AI can take my natural language question, understand what I'm asking for, and if it has access to the contextualized information, it can actually go either write the code to give you that answer or navigate that knowledge graph to get the answer for you,” Schern said.
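To make the idea of navigating a knowledge graph concrete, here is a minimal Python sketch of the leak scenario described above. Everything in it is an assumption made for illustration: the triples, the asset and work order names, and the `build_graph`, `related` and `asset_summary` helpers are hypothetical and are not Cognite's data model or API.

```python
from collections import defaultdict

# Hypothetical contextualized data as (subject, relation, object) triples.
# A real industrial knowledge graph would be far larger and stored elsewhere.
TRIPLES = [
    ("pump-101", "has_valve", "valve-7"),
    ("pump-101", "has_valve", "valve-9"),
    ("pump-101", "has_work_order", "WO-2231"),
    ("valve-7", "has_work_order", "WO-2240"),
]


def build_graph(triples):
    """Index triples as graph[subject][relation] -> list of objects."""
    graph = defaultdict(lambda: defaultdict(list))
    for subject, relation, obj in triples:
        graph[subject][relation].append(obj)
    return graph


def related(graph, node, relation):
    """Return a copy of the nodes one `relation` edge away from `node`."""
    return list(graph.get(node, {}).get(relation, []))


def asset_summary(graph, asset):
    """Collect an asset's valves plus work orders on the asset and its valves."""
    valves = related(graph, asset, "has_valve")
    work_orders = related(graph, asset, "has_work_order")
    for valve in valves:
        work_orders += related(graph, valve, "has_work_order")
    return {"asset": asset, "valves": valves, "work_orders": work_orders}


if __name__ == "__main__":
    print(asset_summary(build_graph(TRIPLES), "pump-101"))
```

In this sketch the links between the pump, its valves and their work orders are the "context." Because the answer is read straight from those links, the same question always returns the same result.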

Most AI used in onshore applications today uses some form of predictive machine learning, since it helps with preventative maintenance. CogniteAI instead uses large language models (LLMs), which can understand natural language questions, to provide responses that go beyond the data the models were trained on. These responses include proprietary industrial data not normally available to an LLM when formulating a response.

“When you're trying to interrogate and gain new insights from existing data, the language models can help you,” Schern said. “When data has been contextualized, we can use a combination of prompt engineering and knowledge graphs to ground a large language model into giving accurate and deterministic answers to the questions asked of the data.”

Cognite uses a private, secure deployment of OpenAI’s models to ensure proprietary data used on the platform isn’t shared publicly, minimizing information leaks and loss of data. The platform also uses various methods to verify information and limit hallucinations, in which a model presents nonexistent content as fact, a known concern with generative AI.

One way is through prompt engineering, or giving the LLM a set of criteria to follow.

“You can actually tell the large language model to not make anything up,” Schern said. “For example, if you use the large language model's ability to write code, then you use prompt engineering to say ‘in response to this question, write the code that's going to give the response.’ It won’t make anything up, it's just writing the code that you would've normally had to write yourself if you knew how to write code, which in this situation, you don't know.”
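As a rough illustration of the kind of prompt engineering Schern describes, the Python sketch below assembles a prompt that limits the model to supplied facts and asks it to respond with code. The function name `build_grounded_prompt`, the instruction wording and the example facts are all hypothetical, and no model is actually called. How Cognite phrases its prompts is not described in the article.

```python
def build_grounded_prompt(question, context_facts):
    """Assemble a prompt that restricts the model to the supplied facts.

    Hypothetical helper: the instruction wording is an illustrative
    assumption, not the prompt used by any particular product.
    """
    facts = "\n".join(f"- {fact}" for fact in context_facts)
    return (
        "Answer using only the facts listed below.\n"
        "If the facts are insufficient, reply exactly: I don't know.\n"
        "Do not make anything up.\n\n"
        f"Facts:\n{facts}\n\n"
        f"Question: {question}\n"
        "Respond with Python code that computes the answer from the facts."
    )


if __name__ == "__main__":
    # Made-up facts standing in for contextualized industrial data.
    example_facts = [
        "pump-101 has valves valve-7 and valve-9",
        "valve-7 was serviced under work order WO-2240",
    ]
    print(build_grounded_prompt("Which valves on pump-101 were serviced?", example_facts))
```

The resulting string would then be sent to whichever LLM is in use. An instruction like this narrows what the model is asked to do, and the generated code can be run against the real data, so the answer comes from the data rather than from the model's recall.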

Scalability still needed

Many new technologies have to cross the threshold of scalability before making their way to the oilfield. However, Cognite is able to get around this problem because of its core capability: contextualization.

“Scalability really comes down to a couple of factors,” Schern said. “One is the data that you're providing to the large language model needs to include context. Context provides an LLM with the detail required to use its natural language processing capabilities to provide a deterministic response.”

Second, scalability requires making sure that an LLM can rapidly be provided with the contextualized content needed to respond.

“Interactive-level user performance of an LLM requires nearly instantaneous delivery of the proprietary industrial data that an LLM should use when formulating an answer, so the large language model can do its work and respond to me almost immediately,” he said.

Beta versions of CogniteAI have been available to a small group, with general availability coming this quarter. Because the platform is a SaaS solution, releases and updates can be added at any time, and Cognite’s product roadmap has different capabilities coming online throughout 2023 and 2024.

Among the new features is the ability to ask a natural language question and have the system write and execute code to solve the problem. Within the next quarter, Cognite plans an update that will go beyond searching for keywords and instead read, interpret and understand the information in documentation to provide answers after making reasoned decisions.

Even with all the advancements CogniteAI is bringing to onshore oilfield operations, Schern says the company plans to keep expanding the use of a variety of large language models for industrial use cases.

“You're going to start seeing a variety of large language models all being used together in concert,” said Schern. “So you'd have a general purpose, large language model like OpenAI and then you might have specialized LLMs for specific tasks, but they're trained on a very specific subset of data and they are tuned to do a specific task really well.”
