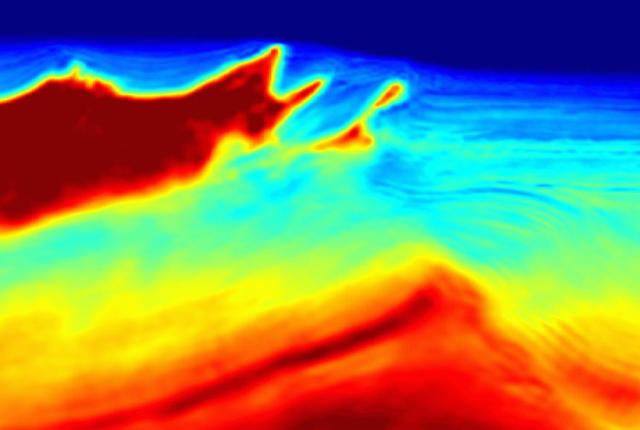

A salt dome is imaged using a relatively new algorithm called full-waveform inversion. (Source: BP)

Presalt took all of the headlines once Petrobras and others figured out its secrets, although it’s still a very challenging environment in which to explore, drill for and produce oil and gas resources. But subsalt has been the bane of the exploration industry’s existence for many decades now.

Why? Three words: Gulf of Mexico (GoM). The GoM is arguably one of the world’s richest hydrocarbon provinces, but it’s darned hard to image. And one of the main problems is something that many people have in shakers on their tables—salt.

Salt is not like sediment. Tectonic forces can squeeze it like toothpaste and form strange subsurface formations that look like mushrooms sprouting thousands of feet below the seafloor. These formations can be imaged by seismic. The problem is the sediments below them often cannot.

Geophysicists are well aware of this problem, and they’ve thrown a host of technologies at these salt formations. BP recently announced that a relatively new algorithm, full-waveform inversion (FWI), might finally be able to help with the subsalt problem. E&P talked to John Etgen, distinguished adviser for seismic imaging at BP, about the evolution of subsalt imaging that ultimately led to the company discovering 200 MMbbl of additional reserves in its Atlantis Field in the GoM.

E&P: Back in the 1990s prestack depth migration was being touted as the solution to subsalt issues. Can you walk us through the evolution since then?

Etgen: In the early 1990s geophysicists realized they were using processing methods that were just not very accurate for imaging below complex geological overburdens like salt bodies. We generally applied normal moveout correction to the data early in the processing sequence and then summed all the offsets together, and we then treated the data as if they were recorded at zero source-receiver offset. But by 1990 computers were getting fast enough that we did not have to sum the data together before running the imaging step. We could image each offset volume of a 3-D survey separately and then combine and optimize the image. This is a more accurate way of creating images.
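To make the older workflow concrete, here is a minimal sketch of normal moveout (NMO) correction followed by a stack over offsets. It is an illustration only, not BP's processing code. It assumes a single flat reflector, a constant velocity and a synthetic Gaussian pulse, and the names `nmo_correct`, `gather`, `t_ref` and the numeric values are all hypothetical.

```python
import numpy as np

def nmo_correct(gather, offsets, dt, velocity):
    """Flatten hyperbolic events in a gather of shape (n_samples, n_traces).

    Assumes one constant velocity for the whole gather (a simplification).
    """
    n_samples = gather.shape[0]
    t0 = np.arange(n_samples) * dt  # zero-offset two-way times
    corrected = np.zeros(gather.shape, dtype=float)
    for j, x in enumerate(offsets):
        # Hyperbolic moveout: time at offset x for an event seen at t0 on zero offset
        t_x = np.sqrt(t0**2 + (x / velocity) ** 2)
        # Read the recorded trace at t_x and place the value at t0
        corrected[:, j] = np.interp(t_x, t0, gather[:, j], left=0.0, right=0.0)
    return corrected

# Synthetic gather: one reflection at 1.0 s zero-offset time (illustrative values)
dt = 0.004                                # sample interval, seconds
n_samples = 501                           # 2 s of data
velocity = 2000.0                         # assumed constant velocity, m/s
t_ref = 1.0                               # zero-offset time of the reflector, seconds
offsets = np.arange(0.0, 3001.0, 100.0)   # source-receiver offsets, metres

t_axis = np.arange(n_samples) * dt
arrival = np.sqrt(t_ref**2 + (offsets / velocity) ** 2)
gather = np.exp(-(((t_axis[:, None] - arrival[None, :]) / 0.02) ** 2))

raw_stack = gather.mean(axis=1)                                      # summed without NMO
nmo_stack = nmo_correct(gather, offsets, dt, velocity).mean(axis=1)  # NMO, then sum
peak_time = t_axis[np.argmax(nmo_stack)]

print("peak amplitude without NMO:", raw_stack.max())
print("peak amplitude with NMO:   ", nmo_stack.max())
print("stacked event time (s):    ", peak_time)
```

The stacked trace stands in for a zero-offset recording. The hyperbolic assumption behind it is the part that does not hold below complex overburden such as salt.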

For the kinds of salt structures found on the GoM shelf/slope, this worked pretty well. There were a few genuine subsalt discoveries like the Mahogany Field discovered by Amoco, Anadarko and Phillips Petroleum in 1993. But as the ’90s wore on we realized that the ‘model’ of wave propagation being used was not accurate enough for imaging below anything but simple salt bodies, and we began to explore wavefield extrapolation migration methods.

As the 2000s arrived, techniques like reflection tomography and shot record migration were improving images quite a bit. As we got better, though, we began to realize there were several issues still holding us back. Since salt refracts acoustic waves, sometimes severely, there were often substantial differences in what one could image from different datasets that seemed to be connected to the sailing direction of the survey. So we experimented with imaging multiple datasets, each acquired at a different azimuth, to get images.
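The refraction Etgen mentions follows Snell's law, and a short calculation shows why it can be severe at a sediment-salt contact. This is a sketch under stated assumptions: the two velocities are illustrative round numbers rather than values from the interview, the interface is flat, and the function names are hypothetical.

```python
import math

# Illustrative P-wave velocities (assumptions, not from the article)
V_SEDIMENT = 2500.0  # m/s
V_SALT = 4500.0      # m/s

def refraction_angle_deg(incidence_deg, v1, v2):
    """Transmitted-ray angle from Snell's law, or None past the critical angle."""
    s = math.sin(math.radians(incidence_deg)) * v2 / v1
    if s > 1.0:
        return None  # no transmitted ray: energy is totally reflected
    return math.degrees(math.asin(s))

def critical_angle_deg(v1, v2):
    """Incidence angle beyond which nothing is transmitted (needs v2 > v1)."""
    if v2 <= v1:
        return None
    return math.degrees(math.asin(v1 / v2))

crit = critical_angle_deg(V_SEDIMENT, V_SALT)
bent = refraction_angle_deg(20.0, V_SEDIMENT, V_SALT)
blocked = refraction_angle_deg(40.0, V_SEDIMENT, V_SALT)

print("critical angle (deg):", crit)
print("20 deg incidence refracts to (deg):", bent)
print("40 deg incidence transmits:", blocked is not None)
```

With a velocity contrast this large, rays arriving at moderately oblique angles bend sharply or do not enter the salt at all, which is consistent with images that change with the survey's shooting direction.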

In addition, it was becoming clear that multiple reflections were causing confusion in these images. There were some processing methods like surface-related multiple elimination that were helping to remove multiple reflections from the data before imaging, and they were a significant advancement.

But as we pressed into more complex areas with even more complex salt bodies, there were still areas that we could not image. We needed advanced modeling/simulation efforts to create and test alternative seismic acquisition designs to see if we were collecting enough data with the existing datasets and, if not, what we might do to collect better datasets; that led to the next big advance.

In 2003 BP modeled its recently discovered Mad Dog Field and came to the conclusion that there was no way to image the core of the field with any existing dataset, no matter how much we assumed about the salt, both because of illumination gaps and because of a very difficult issue with removing multiple reflections. BP designed the industry’s first streamer-based WAZ [wide-azimuth] survey, first in the computer and then by executing it in the field. Initial images from that survey showed the effort to be paying off.

That success led to BP acquiring several more proprietary WAZ towed-streamer datasets at other GoM discoveries. Soon there were other operators using these same techniques and, in fact, the service industry further innovated on WAZ streamer methods to make them economically tenable for speculative surveys over open acreage.

BP also tried another new technology for wide-/full-azimuth data at its Atlantis Field about a year later where we used the first industrial-grade deepwater autonomous nodes. BP had actually tested an academic nodal system at Thunder Horse in 2001 to create a proof of concept. In many ways the technical results from the Atlantis survey were quite similar to the results at Mad Dog: largely successful and ultimately paving the way for 4-D surveys of these complex fields in deep water. However, the economic case for shooting ocean-bottom node (OBN) data over exploration acreage was not good. Node surveys were cost-effective over a mid-sized field, but the time and complexity of node deployment and retrieval restricted them to existing discoveries and 4-D surveys.

The Atlantis experience showed us that with all of those variables, one can pretty much nail the velocity model, especially with low frequencies. It gets cumbersome to get those offsets and the azimuthal coverage with streamer vessels. With all of those ingredients (high data density, long offsets, ultralow frequencies, large surveys and full-azimuth coverage), we might be able to solve the subsalt imaging problem. I think we are only a few engineering advances away in terms of getting a robust low-frequency source and an efficient OBN deployment and retrieval system.

The 2004 isotropic velocity model (top) did not contain enough information for the additional resources at Atlantis to be imaged. In the bottom image, the salt dome is more accurately imaged. (Source: BP)

E&P: Why is FWI so important to subsalt imaging?

Etgen: There are three closely coupled facets. First, you need a good model of the salt body. But second, to get that you need an accurate model of the surrounding sediments. Third, that accurate sediment model brings interesting and valuable uplift in image quality on its own independent of the improvement in the salt body description. We saw this at Thunder Horse. You really want all these effects. When FWI works well, it does them all.

E&P: Regarding subsalt plays in general, where do you think the industry is headed next?

Etgen: Building an accurate velocity model for imaging is the most important aspect of creating good images. Yes, you need high-density long-offset WAZ (or OBN) data to create good images and see into the illumination gaps and suppress multiple reflections. But without that velocity model, you are sunk. So I think we’ll continue to see efforts aimed at making that velocity model construction exercise faster, better and cheaper. I also think one of the surprises at Atlantis was the fact that the initial huge uplift in image quality was made with a model that did not have distinct salt boundaries in it. That flew in the face of conventional wisdom about salt models. We all thought that they had to be ‘crisp’ to make good images. What seems to be true is that it’s better to be slightly smooth and close than very ‘sharp’ and even a little bit wrong. That opens all sorts of possible ways of making further improvements.
