Perhaps that is a little dramatic, but these words convey my emotional reaction when I hear people say they have optimized a decision. I believe that, in the long run, the exclusive pursuit of optimization leads to stagnation, operational inefficiency, and loss of competitiveness. At some point we must balance competing priorities, but we are increasingly prone to optimize before we have done the necessary engineering.

The trend is strongly tied to advances in computer models and statistical methods, and these advances are changing the way a new generation of practitioners approaches engineering design itself. If differentiating performance is important to your company, this trend may be of concern to you.

Optimization is the process of pushing performance until the risk-weighted cost of failure outweighs the gain. This holds for decisions as diverse as the drawdown applied to increase well productivity, the weight on bit applied to drill faster, or the increase in mud weight used to better stabilize the borehole. For example, we know that increasing drawdown increases production, but in low-strength formations there is a level of drawdown that will cause the borehole to collapse. The actions we can take to improve performance are usually known. To change performance significantly, however, we must first reengineer the risks that prevent us from taking those actions.
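The definition above can be illustrated with a toy model. The sketch below is a hypothetical, dimensionless illustration, not the author's method and not field data: it assumes a production gain that rises linearly with drawdown, a logistic probability of borehole collapse centred on an assumed collapse limit, and an arbitrary failure cost. Optimizing against the historical collapse limit stops well short of that limit, while redesigning the limiter (modelled here simply as a higher assumed collapse limit) moves the optimum up.

```python
import math

# Toy, dimensionless model. Every function and number here is a hypothetical
# assumption for illustration; none of it comes from field data.

FAILURE_COST = 100.0  # assumed cost of a borehole collapse, in units of gain
SPREAD = 0.05         # assumed uncertainty (width) of the collapse threshold


def gain(drawdown: float) -> float:
    """Assumed production benefit, rising linearly with drawdown."""
    return 10.0 * drawdown


def collapse_probability(drawdown: float, collapse_limit: float) -> float:
    """Assumed logistic probability of collapse centred on collapse_limit."""
    return 1.0 / (1.0 + math.exp(-(drawdown - collapse_limit) / SPREAD))


def risk_weighted_value(drawdown: float, collapse_limit: float) -> float:
    """Gain minus the expected (probability-weighted) cost of failure."""
    return gain(drawdown) - collapse_probability(drawdown, collapse_limit) * FAILURE_COST


def optimize(collapse_limit: float, candidates: list[float]) -> float:
    """Pick the candidate drawdown with the highest risk-weighted value."""
    return max(candidates, key=lambda d: risk_weighted_value(d, collapse_limit))


# Drawdown expressed as a fraction from 0.00 to 1.00 in steps of 0.01.
candidates = [i / 100 for i in range(101)]

# Optimizing within the historical limiter versus after redesigning it.
historical_optimum = optimize(collapse_limit=0.4, candidates=candidates)
redesigned_optimum = optimize(collapse_limit=0.7, candidates=candidates)

print(f"optimum with historical limit: {historical_optimum:.2f}")
print(f"optimum after redesigning the limit: {redesigned_optimum:.2f}")
```

Searching more finely over `candidates` only refines the answer inside the envelope set by `collapse_probability`; in this sketch the optimum moves materially only when the assumed collapse limit itself changes, which mirrors the argument that the limiter must be reengineered first.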

The growth in statistical engineering methods and the ability to mine massive volumes of data are creating the impression that statistical design methods alone will achieve differentiating performance. But differentiating performance can only come from doing the work in a way that is fundamentally different from the way your competitors do it. By its nature, optimization seeks the best way of doing the work based on the results of historical practices. It cannot yield new practices or designs.

Returning to the examples above, companies that have developed new deterministic methods for calculating wellbore collapse and drawdown limits have doubled well productivity relative to optimized limits based on field experience. New practices that have eliminated the risk of differential sticking have allowed fluid density to be maximized, so there is no compromise between wellbore stability risk and stuck-pipe risk. Drilling rates have been raised to three to four times the historical optimum by redesigning the risks that limited weight on bit. In contrast, companies that optimize based on historical practices are designing to avoid the historical causes of failure rather than to fundamentally change performance.

Differentiating performance starts with developing a deterministic understanding of the physics of your limiter and then developing new practices based on that understanding. This makes sense, and most of us would assume that people naturally work this way. But is it really what your organization does? A few questions can raise a red flag. Is historical experience used to show that one solution is better than another without a deterministic explanation of why it works better? Can the designer tell you which risks limit performance and what they have done to change those limiters? Are your project discussions about avoiding failure or about changing performance limiters? When a change in practices is recommended to address a risk, does management reward the initiator or prefer the optimized solution based on past experience? Do you ask, “What is most likely to succeed?” before you ask, “How can we change what is most likely to succeed?”

Statistics and optimization should be used to address uncertainty, but not until the risks that limit performance have been redesigned to expand the safe operating space for increased performance.

Recommended Reading

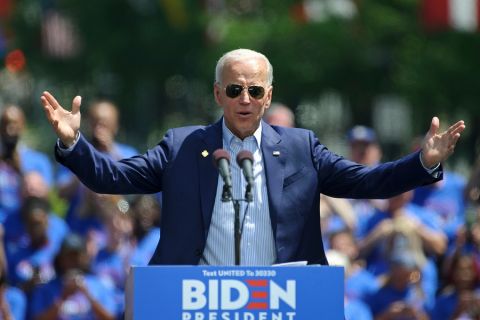

Belcher: Election Year LNG ‘Pause’ Will Have Huge Negative Impacts

2024-03-01 - The Biden administration’s decision to pause permitting of LNG projects has damaged the U.S.’ reputation in ways impossible to calculate.

CERAWeek: Energy Secretary Defends LNG Pause Amid Industry Outcry

2024-03-18 - U.S. Energy Secretary Jennifer Granholm said she expects the review of LNG exports to be in the “rearview mirror” by next year.

Biden Totters the US LNG Line Between Environment, Energy Security

2024-01-30 - Recent moves by U.S. President Joe Biden targeting the country’s LNG industry, which has a number of projects in the works, are an attempt to satisfy environmentalists ahead of the next upcoming presidential election.

Watson: Implications of LNG Pause

2024-03-07 - Critical questions remain for LNG on the heels of the Biden administration's pause on LNG export permits to non-Free Trade Agreement countries.

Pitts: Producers Ponder Ramifications of Biden’s LNG Strategy

2024-03-13 - While existing offtake agreements have been spared by the Biden administration's LNG permitting pause, the ramifications fall on supplying the Asian market post-2030, many analysts argue.