Sharp Reflections’ elastic computing software helps to interpret huge seismic data volumes. (Source: Shutterstock)

Computing advances have made it possible to interpret huge seismic data volumes, including time lapse, quickly enough that they can help operators better manage their reservoirs.

Elastic computing is at the core of analysis software provider Sharp Reflections’ approach to churning through prestack seismic data sets more easily, and its use cases have expanded beyond enabling operators to work with richer data sets.

Time lapse data sets contain vast volumes of data that can go stale if not interpreted and acted upon quickly. The company is working to release a new time lapse module that facilitates working with collections of multiple survey vintages, along with modules for amplitude interpretation, seismic inversion and azimuthal interpretation, according to company president and CEO Bill Shea.

“Historically, it’s been a pain to work with prestack data because it’s too big,” Shea said, noting the data is acquired with very high fidelity and that it gets boiled down and condensed by a huge amount on the way to the interpreter's desk. “A typical survey in its raw state while it's being acquired might be a hundred times more data points than what might actually wind up on the desk of the person that's looking at the data.”

That data is processed into what can be called imaged prestack seismic, using advanced algorithms that work on data from field tapes.

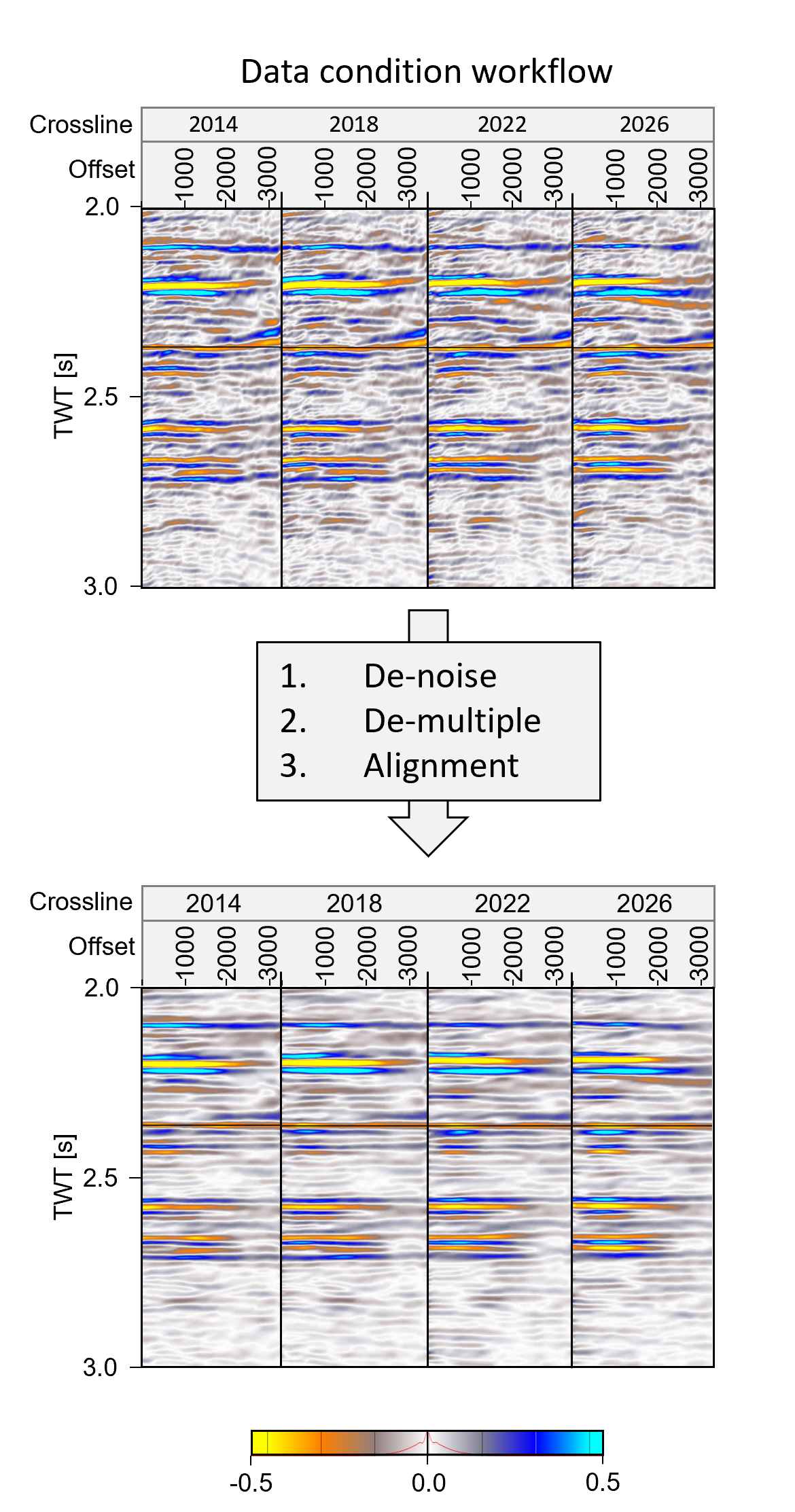

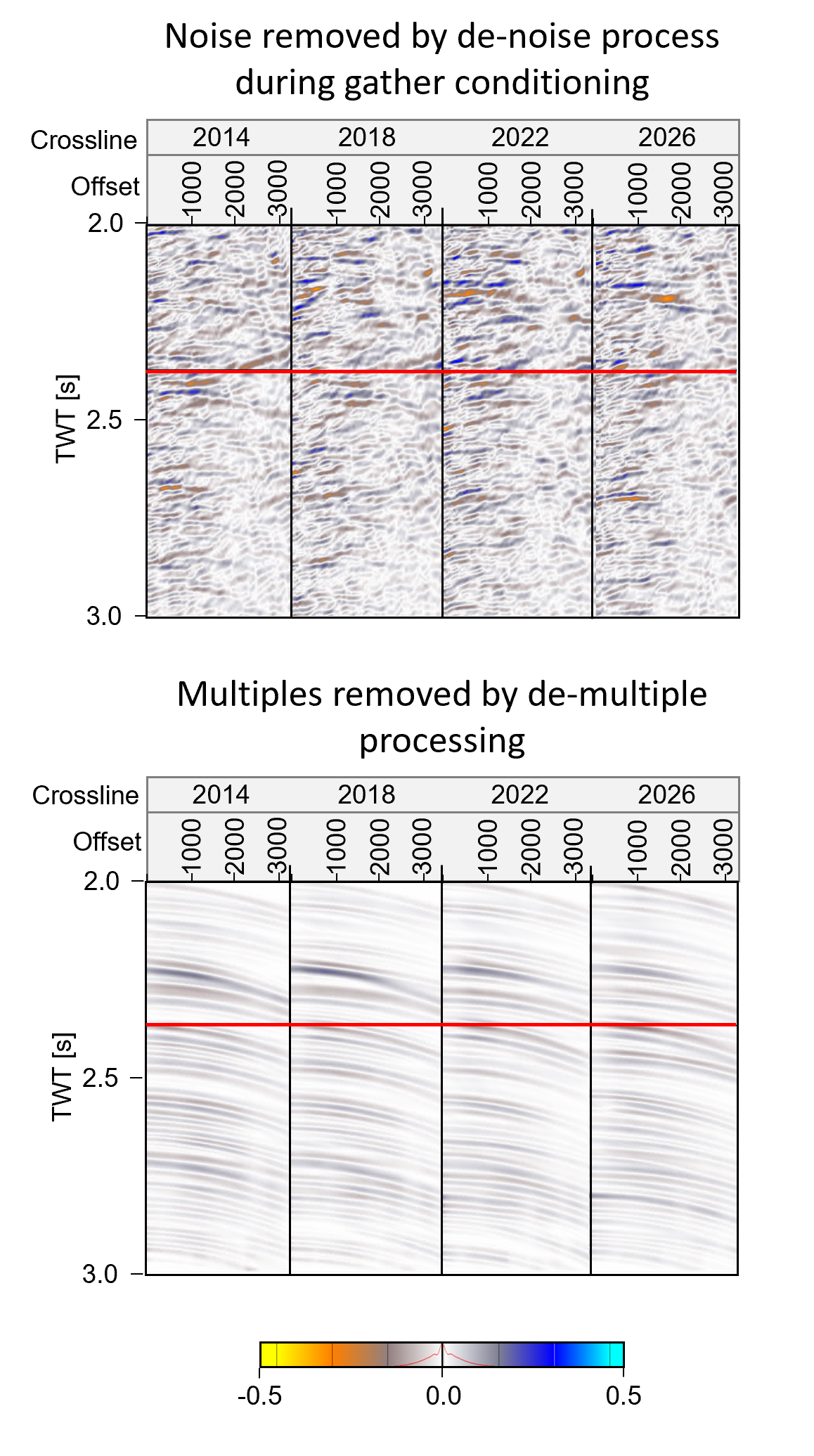

These prestack gathers are then further processed after migration to reduce data noise.

This post-migration processing is critical to get right in order to see fluid effects in the data, Shea added. Typically, there’s less than a 10% signal difference between oil- and water-filled reservoirs.

Because the vast volumes of prestack data were hard to work with, the industry long ago converged on shortcuts to make it possible to work with that data at workstations, he said.

By harnessing today’s greater compute power, those shortcuts are no longer necessary, and interpreters can more easily work with the full data set.

Core computing advances

About 15 years ago, the first multicore processing chips went on the market, following an Intel announcement that the traditional way of improving single central processing unit (CPU) performance had reached its limit and new chips were starting to melt. Multicore processing is now the standard in CPU design.

The new multicore chips made it possible to distribute the computing load onto multiple cores to crank up processing speeds. But, Shea said, the only way to exploit the speed potential of the new chip technology was to use parallel compute coding.
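As a rough illustration of what parallel coding means in practice, the Python sketch below splits a set of prestack gathers into chunks and hands each chunk to a separate worker process. It is a minimal sketch under stated assumptions, not Sharp Reflections’ code: the function names, the tiny in-memory data layout and the simple averaging "stack" are all hypothetical stand-ins for real processing steps.

```python
import os
from concurrent.futures import ProcessPoolExecutor
from typing import List, Optional

Trace = List[float]       # one recorded trace (amplitude samples)
Gather = List[Trace]      # hypothetical layout: all traces for one location


def stack_gather(gather: Gather) -> Trace:
    """Average the traces of one gather sample by sample (a simple stack)."""
    n = len(gather)
    return [sum(samples) / n for samples in zip(*gather)]


def split_into_chunks(gathers: List[Gather], n_chunks: int) -> List[List[Gather]]:
    """Split the survey into contiguous chunks, one unit of work per chunk."""
    size = max(1, -(-len(gathers) // n_chunks))  # ceiling division
    return [gathers[i:i + size] for i in range(0, len(gathers), size)]


def stack_chunk(chunk: List[Gather]) -> List[Trace]:
    """Work done by a single core: stack every gather in its chunk."""
    return [stack_gather(g) for g in chunk]


def stack_serial(gathers: List[Gather]) -> List[Trace]:
    """Single-core version: extra cores sit idle."""
    return stack_chunk(gathers)


def stack_parallel(gathers: List[Gather], workers: Optional[int] = None) -> List[Trace]:
    """Multicore version: each chunk is processed in its own worker process."""
    n_workers = workers or os.cpu_count() or 1
    chunks = split_into_chunks(gathers, n_workers)
    with ProcessPoolExecutor(max_workers=n_workers) as pool:
        stacked = pool.map(stack_chunk, chunks)  # results keep input order
        return [trace for chunk in stacked for trace in chunk]
```

Both functions return the same result; only `stack_parallel` spreads the work over several cores. When running it as a script, call it from under an `if __name__ == "__main__":` guard, as Python's process pools require on some platforms.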

Sharp Reflections was formed around the time Intel announced it would make the new multicore chips, he said, and the company writes software code in a way that exploits the way those chips work.

And the company’s goal is to be able to handle bigger volumes of seismic data with the speed an interpreter needs to make timely decisions.

“If you wrote new code to that paradigm, you could have software that runs 50 times faster than it did 10 years ago, especially if you take advantage of computer clusters. If you didn't rewrite your code, you get basically nothing out of any of those extra cores,” he said.

“That's kind of creating this huge divergence today between the few companies like us that came along and said, ‘Okay, we're going to take the Intel challenge,’ and then the rest who said, ‘Well, we could probably skate by on what we have for an unspecified number of years, just not worrying about whether it gets much faster than it used to.’”

One of the results, aside from speedy processing, is that the size of the cluster needed for processing data is shrinking.

“The size of the computer that you need to do the jobs that we needed a big rack of servers to do 10 years ago is constantly shrinking,” he said. “In the future, we probably will wind up in a place where we can be back to a single workstation or server that has so much more compute power on it that it can actually handle these tasks that 10 years ago it couldn't. But we're not there yet.”

A typical prestack survey that Sharp Reflections works with starts at around 10 terabytes of inputs and may result in outputs that are three or four times that size.

“The raw data being collected by the seismic companies is just the beginning of it,” he said.

And of course computing technology is always evolving.

“We are in a new era of computing where companies like Amazon and Microsoft are all offering what they call elastic computing, which is basically bigger computers with more power and more memory that happily churn through these prestack data sets” using software like that supplied by Sharp Reflections, Shea said. “The world moves fast when technology’s on the move.”

Shifting into reservoir management

The company’s own offerings have also moved quickly, evolving from an initial project sponsored by Statoil that would carry out quality assurance on prestack data incoming from seismic contractors and prepare it for detailed quantitative interpretation to a hybrid product that was part processing and part interpretation.

Through a series of industry-funded projects, Sharp Reflections was able to continue adding to the functionality of its core offering, to create the Sharp Reflections Big Data platform product. The platform includes toolkits of capabilities, including PRO (Prestack Data Enhanced). QAI (Quantitative Amplitude Interpretation), INV (Inversion) and AZI (Azimuthal Interpretation) toolkits are already available and a 4D Time-Lapse toolkit is under development. A soft launch of the 4D toolkit started rolling out to users in October 2022, and commercial release is slated for the fourth quarter of 2023.

“Our platform is expanding rapidly, and we decided that the PreStack Pro product name no longer reflects everything we do,” Shea said. “Today the Sharp Reflections Big Data platform offers more to more people. We see a broader user group of quantitative seismic interpreters and reservoir geoscientists now using these toolkits to work on stacked datasets. Even as stacks, 4D and wide or full-azimuth datasets are too big for a standard workstation-based approach.”

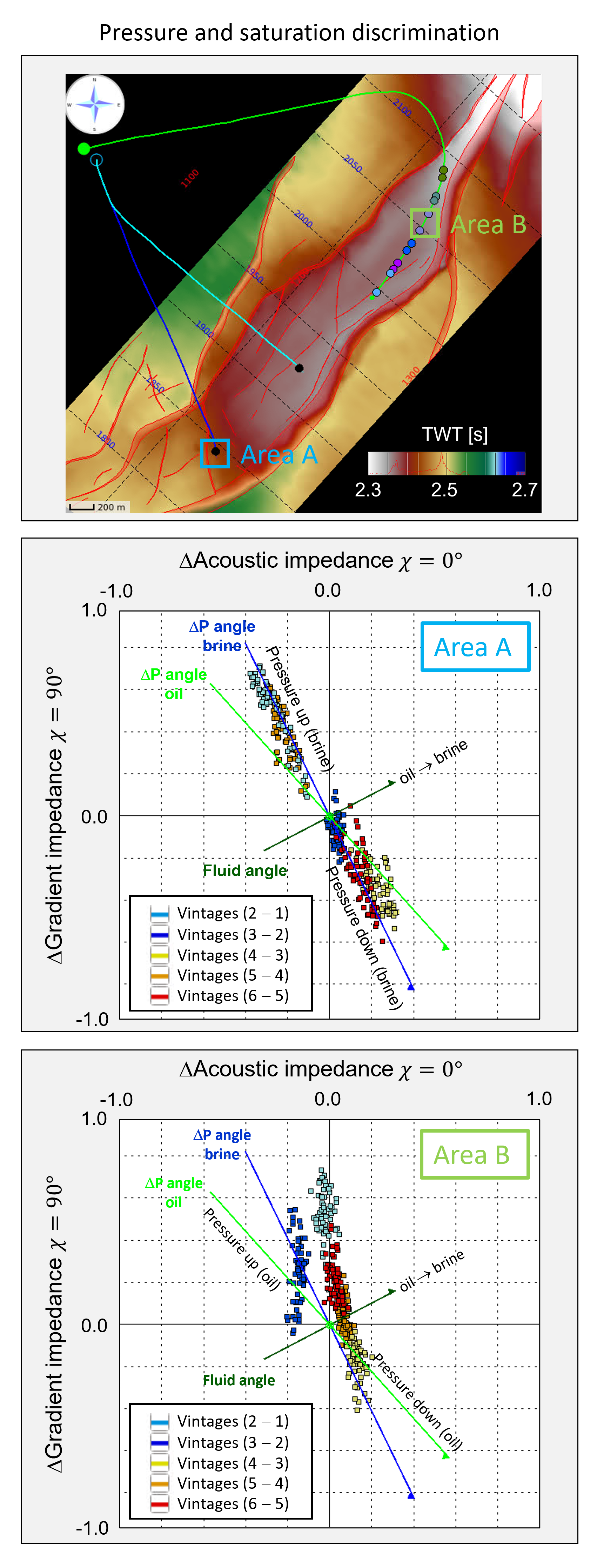

QAI sharpens reservoir insight using multistep QAI analysis, while INV improves reservoir delineation and net pay estimation. AZI improves understanding of complex geological structures, and 4D will help identify poorly drained or bypassed reservoir sections.

“The more advanced tools are for reservoir management, rather than exploration,” Shea said.

When the company started, it handled only seismic data for exploration activities with little well data involved, he said.

“We now incorporate well data directly to provide a reservoir management tool for fields that are in development,” he said.

Reservoirs across time

Part of managing a reservoir means knowing how that reservoir is changing over time.

Time lapse, or 4D, seismic helps with that. But for time lapse data to be useful, the interpreters need to be able to “digest that quickly, make sense of it and use it to plan other wells,” Shea said. “That’s a lot of data to digest, to make sense of it fast. That is a sweet spot for where our big data capability can make a difference.”

And it’s important for companies to act on time-lapse data in a timely fashion.

“Think of time-lapse seismic as fresh grocery goods. The data goes stale really fast if you acquired it and it took you two months to process it. It's only reliably giving you the basis to make drilling decisions for a few months after the day it becomes available,” he said. “After that, there's another survey coming in which you've got to jump on and start to work with.

“So it's a space where faster analysis is really important because if you're too slow in the interpretation, then you basically wasted the investment in the seismic.”

Original seismic in a field before production begins can provide baseline data about the reservoir, while follow-up surveys shot with the same parameters as the original can identify production-related differences in the reservoir such as depletion and changed pressures. Such information can be used to determine optimal infill drilling patterns in older fields, he said.
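The baseline-versus-monitor comparison described above can be sketched in a few lines of Python. This is a simplified illustration only, assuming two amplitude maps sampled on the same grid; the function names, the grid layout and the threshold are hypothetical, and real 4D workflows involve considerably more conditioning of the data before surveys are differenced.

```python
from typing import List, Tuple

Grid = List[List[float]]  # hypothetical amplitude map: rows of grid cells


def difference_map(baseline: Grid, monitor: Grid) -> Grid:
    """Subtract the baseline survey from the monitor survey, cell by cell."""
    same_shape = len(baseline) == len(monitor) and all(
        len(b) == len(m) for b, m in zip(baseline, monitor)
    )
    if not same_shape:
        raise ValueError("surveys must be sampled on the same grid")
    return [
        [m - b for b, m in zip(b_row, m_row)]
        for b_row, m_row in zip(baseline, monitor)
    ]


def changed_cells(diff: Grid, threshold: float) -> List[Tuple[int, int]]:
    """List the (row, column) cells whose amplitude changed by more than threshold."""
    return [
        (i, j)
        for i, row in enumerate(diff)
        for j, value in enumerate(row)
        if abs(value) > threshold
    ]


baseline = [[1.0, 1.0], [1.0, 1.0]]
monitor = [[1.0, 0.5], [1.0, 1.0]]   # made-up values for illustration
diff = difference_map(baseline, monitor)
print(changed_cells(diff, threshold=0.25))  # [(0, 1)]
```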

For example, ConocoPhillips, which is a participant in Sharp Reflections’ current project development consortium, has more than 20 sets of seismic data over the Ekofisk Field in the North Sea.

“That creates a data deluge. How do you keep on top of 20 surveys, more than that, looking at all the differences?” he said. “They have to basically compare pair-wise each and every survey.”

That means if there are 20 surveys, there are quite a lot of combinations of pairs that could be compared and calculated, he said.
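To put a number on that, n surveys give n(n-1)/2 distinct pairs, so 20 surveys yield 190 possible comparisons. The short Python sketch below enumerates them; the survey labels are hypothetical placeholders, not actual Ekofisk vintages.

```python
from itertools import combinations
from math import comb
from typing import List, Tuple


def survey_pairs(vintages: List[str]) -> List[Tuple[str, str]]:
    """Return every distinct pair of surveys that could be compared."""
    return list(combinations(vintages, 2))


# Hypothetical labels standing in for 20 survey vintages.
vintages = [f"survey_{i:02d}" for i in range(1, 21)]
pairs = survey_pairs(vintages)

print(len(pairs))        # 190
print(comb(20, 2))       # 190, the same count from the formula n(n-1)/2
```

Each additional survey adds one new pair for every survey already on file, which is why the number of comparisons grows much faster than the number of surveys.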

“For the interpreter, that becomes a mega battle,” Shea said.

Some of the capabilities Sharp Reflections had built into its software to handle prestack seismic are useful for keeping track of “this burgeoning collection of time lapse surveys” and making results available quickly, he added. “Previously what was a huge sequence of pair-wise calculations is now being done in a fully automated way with a few mouse clicks. You get hundreds of maps at each reservoir level in the time that it used to take to generate two.”
